Models keep you secure in the enterprise vibe

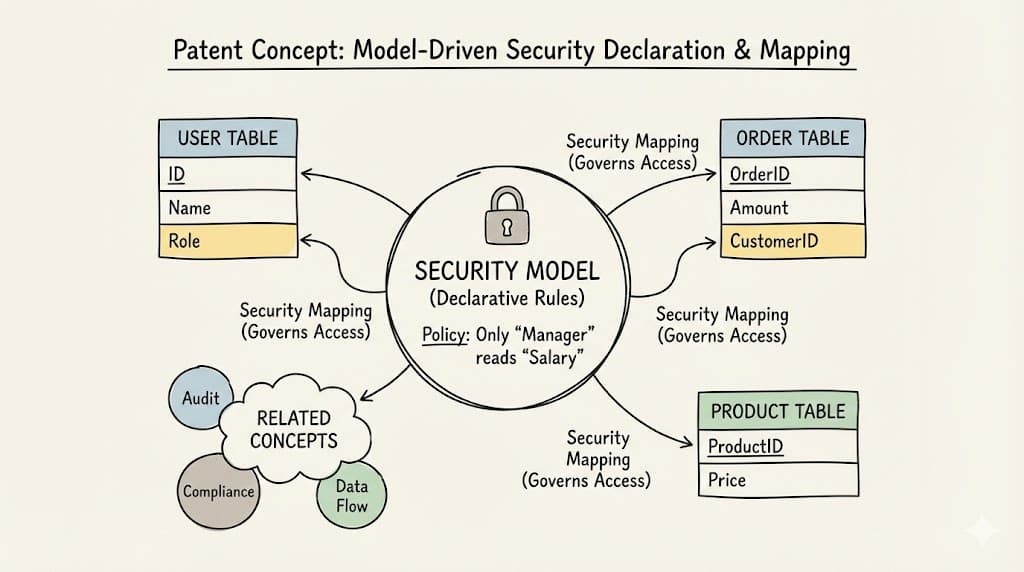

In 2013, I filed a patent for "Model-based security control".

At the time, "AI" was barely a whisper in the boardroom. My team and I were focused on a very human problem: Spaghetti Security.

We saw that when developers wrote code, they inevitably buried security logic inside business logic. A check for “UserRole = Manager” would be hardcoded on line 42, then forgotten on line 80.
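To make the failure mode concrete, here is a minimal sketch of what that looks like in practice. The function names and data shapes are hypothetical, invented only for illustration.

```python
def view_salary(user: dict, employee: dict) -> int:
    # Security check buried inside business logic ("line 42").
    if user["role"] != "Manager":
        raise PermissionError("Managers only")
    return employee["salary"]


def export_report(user: dict, employees: list[dict]) -> list[dict]:
    # Written later, touching the same sensitive field ("line 80").
    # Nothing forces the author to repeat the role check, so it is missing.
    return [{"name": e["name"], "salary": e["salary"]} for e in employees]
```

Both functions read the same field, but only one of them guards it. Nothing in the language or the tooling flags the second one as a problem.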

Our solution was radical but simple: Pull security out of the code. Make it declarative. Define it in the Model (DSL).

If security is a property of the data and the architecture—not the code—then a developer cannot accidentally bypass it. The platform enforces the rules, no matter what code you write.
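A rough sketch of the idea, written in Python rather than a real modeling language. Everything here (`FieldRule`, `EMPLOYEE_MODEL`, `read_record`) is a hypothetical stand-in, not the patented mechanism or any actual platform API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldRule:
    """Declares which roles may read a field (illustrative only)."""
    readable_by: frozenset


# The "model": security is declared once, next to the data definition.
EMPLOYEE_MODEL = {
    "name": FieldRule(frozenset({"Employee", "Manager"})),
    "salary": FieldRule(frozenset({"Manager"})),
}


def read_record(record: dict, role: str, model: dict) -> dict:
    """Platform-side enforcement: return only the fields this role may read.

    Fields that are not declared in the model are denied by default.
    """
    return {
        name: value
        for name, value in record.items()
        if name in model and role in model[name].readable_by
    }


def developer_code(role: str) -> dict:
    # Business logic contains no security checks at all.
    record = {"name": "Ana", "salary": 90000}
    return read_record(record, role, EMPLOYEE_MODEL)
```

In this sketch the developer never writes a role check. As long as every read goes through the platform function, there is no check to forget.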

Fast forward to 2026.

That patent isn't just about cleaning up human code anymore. It is the missing link that turns "Vibe Coding" from a cool prototype into an enterprise superpower.

Vibe Coding is the Engine. The Model is the Steering.

I love the concept of Vibe Coding. The ability to express intent ("I want a dashboard for sales data") and have an AI generate the logic is a massive leap forward.

But in the enterprise, "vibes" aren't enough.

When an LLM "vibe codes," it is predicting plausible code from patterns it has seen, and what comes out is usually imperative. What it often lacks is the architectural context.

- Does it know who is allowed to see that sales data?

- Does it know the compliance rules for that region?

- Does it know the dependency impact on other modules?

If you rely on the AI to "remember" to write those security checks in the code, you are rolling the dice. Recent reports suggest nearly half of AI-generated code snippets contain security gaps when left unmanaged.

How DSLs Supercharge AI

This is where the 2013 patent logic becomes critical again.

We don't need to stop Vibe Coding. We need to empower it with a Domain Specific Language (DSL).

When you have a strong DSL (like the OML system we use at OutSystems), the "Vibes" (the AI's intent) get compiled through the "Model" (the Guardrails).

- The Model (DSL): Declaratively defines the security ("Only Managers see Salary").

- The AI (Vibe Coding): Generates the flow ("Show me the user list").

- The Result: The platform accepts the AI's intent but automatically injects the security filters required by the Model.

The AI doesn't need to be a security expert. It just needs to be a logic expert. The DSL handles the safety.
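The three roles above can be sketched in a few lines of Python. This is an illustration under assumptions: `Query`, `MODEL`, `ai_generated_flow`, and `apply_guardrails` are hypothetical names, and the sketch does not show how OML or the OutSystems platform works internally.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Query:
    """A simplified stand-in for logic produced by the AI."""
    entity: str
    columns: tuple


# The Model: declares which roles may see each attribute.
MODEL = {
    "User": {
        "name": {"Employee", "Manager"},
        "email": {"Employee", "Manager"},
        "salary": {"Manager"},  # "Only Managers see Salary"
    }
}


def ai_generated_flow() -> Query:
    # The AI: answers "Show me the user list" with no knowledge of security.
    return Query(entity="User", columns=("name", "email", "salary"))


def apply_guardrails(query: Query, role: str) -> Query:
    # The platform: keeps the intent, drops columns the role may not read.
    # Columns missing from the model are denied by default.
    rules = MODEL[query.entity]
    allowed = tuple(c for c in query.columns if role in rules.get(c, set()))
    return Query(entity=query.entity, columns=allowed)
```

The generated flow is the same for every caller. Only the guardrail step differs by role, so the filtering does not depend on what the AI remembered to write.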

Governed Autonomy

This is how Vibe Coding wins in the enterprise.

We aren't blocking the AI; we are giving it a safe sandbox. We are moving toward "Governed Autonomy."

This approach allows us to deploy Agents that are creative and fast ("Vibe Coding") but strictly bound by business rules ("The Model").

I didn’t know it in 2013, but by defining security in the model, we were building the chassis that allows AI to drive safely at speed today.